To "get" AI, you have to try the impossible.

Lots of hot air out there about what genAI can and can't do. To get your real answer, you can't just try it out. You have to struggle with it on a task you think is impossible.

“Once i got a grasp, it finally became a tool.”

- Master’s student, reflecting on a quarter of using genAI to build software

The world is obviously awash in commentary about the implications and nature of genAI. Will it kill jobs? Will it create them? Is it too biased? Is it less biased than we are? Did its creators have to steal intellectual property to train it? How fast is it improving? How should we regulate it? Is China going to win the AI arms race, or is the US? When is it good for it to be free, open software like Llama, and when a paid, proprietary product like ChatGPT?

It’s critical that we ask these and many other questions, and that our global conversation reflect diverse perspectives. Generative AI is clearly one of the most consequential general-purpose technologies we’ve ever seen, and we need to make sure we are informed so we can make wise decisions about it.

The more I’ve consumed the global conversation, the more I’ve concluded there’s a subtle bias in many accounts. One that really threatens our ability to get good data to answer our questions. It’s not about race. Gender. Socioeconomic status. West vs. east. Occupational differences. Political leanings. Silicon Valley bro-hood.

It’s about skill.

There’s a hump to get over with this technology. A journey of going from 0 to 1. A hard-to-articulate understanding of what it is and what it’s capable of - and a sense of awe (which includes fear) and possibility - that only comes from struggling with it towards something you previously thought of as impossible. Win, lose, or draw, at the end of this kind of work we become more capable and confident, and we have something in our bones that helps us make more grounded guesses about what this technology means for us personally and for us as a species.

Most folks don’t go from 0 to 1. They try genAI out on something straightforward, get a bit of an understanding, then think they know about it. And for sure, they do. Knowing how to get a first draft of a convincing email, or an essay, or proofreading help, or explanation of a concept... that’s all important. Useful. But those kinds of tasks don’t really stretch the user or demonstrate the true power of the tool, so we walk away - at best - with a simplistic understanding of what we’ve got in our hands.

For instance, you probably know about genAI “hallucination” by now - the fact that these systems will produce very convincing false information when we ask a question. If you don’t already have some expertise in the domain you’re asking about, you can get hoodwinked. This can even happen if you know that these systems hallucinate! Of course many, many folks still don’t even know this basic fact. They are much more prone to taking genAI’s output as gospel, or waving LLMs off as hallucination machines.

But there’s a deeper layer of expertise about hallucination - one that many of us never build: a fine-grained, intuitive understanding of when and how genAI hallucinates, and what consequences that has for complex work relying on genAI. Sometimes, for instance, hallucination turns out to be a very helpful feature - just on a personal level, it can help us check our own knowledge, provide creative ideas, or motivate us to learn more. To pick another example of this shallow-vs-deep skill problem: some of us might know by now that ChatGPT (and other LLM-enabled tech) will give you better results if you tell it to “take a deep breath”, or “think step by step.” Good. Important that some of us know this. But that’s not the same as understanding why and how that works - knowing how to program social machines. You might not be able to say what you know about prompting, but you know how to weave psychology and sociology into your prompts to get better results - and what bullshit on that topic sounds like.

There’s a whole line of research on this deeper kind of expertise - the kind that allows you to respond productively to surprises and uncertainty, the kind that helps you judge cause and effect in complex situations, the kind where the tool starts to feel like a part of you, but you get its limitations and possibilities in a way that’s hard to articulate with words. If you want to learn more here, you could do way worse for yourself than to read Doug Harper’s magical “Working Knowledge: Skill and Community in a Small Shop”. Doug was an ethnographer and a photographer, so the book’s filled with detailed, brilliant photos that show him apprenticing to Willy, a master blacksmith, mechanic, and tinkerer in an upstate NY repair shop.

Research on this, like Doug’s book, tells us: you’ll only get to deep skill with genAI by persisting with it on a complicated problem. Success and failure are secondary - you learn a lot by trying, figuring out what works, what doesn’t. What’s possible. And that’s a moving target, because the more you try, the better you get at it all.

New findings: learning to code in three weeks

The rest of this piece is more or less devoted to proving the above points by giving you a first-in-the-world peek at data from an educational experiment I ran in the fall with massive help from the amazing Brandon Lepine, a PhD student in my Technology Management department here at UCSB.

For the first phase of this project, we asked students to write working Python code (aka create software) to analyze a complex dataset. We gave them three weeks (in the midst of a full curriculum of other coursework). And we told them to work alone. No help, no tutorial from us, no googling. Just you, ChatGPT or Bing, and the assignment. Figure it out.

Here’s the rub. They didn’t know how to code:

So out of a class of 26, 3 were professional software engineers, 3 could “do some okay stuff” with code, but 20 (77%) had no or next to no skill. In fact these numbers concealed a fair bit of terror at the prospect of this assignment. A significant chunk of the students who rated themselves as a “1” had deliberately avoided coding throughout their educational and work experience. Some hadn’t ever opened a terminal window on any computer, ever. Some didn’t even know what the word “Python” meant if it wasn’t an animal. They weren’t kidding with that “1”. From the point of view at the beginning of this piece, they were a hard 0.

We made them try anyway.

Students had to use genAI to write code that would analyze an open dataset about multiple construction projects (e.g., “Report the total number of open and closed tasks by task group”) and visualize that analysis (e.g., “Create a bar chart of the total number of overdue tasks by project.”). Alone.
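To give a feel for the size of that ask, here is a minimal sketch of the two example prompts in plain Python. Everything in it is an assumption made for illustration: the rows, the column names (`project`, `task_group`, `status`, `due`), and the rule that a task is "overdue" when it is still open past its due date. The real dataset and the students' own solutions are not shown in this piece, and a text chart stands in for a plotted one so the sketch needs nothing beyond the standard library.

```python
from collections import Counter
from datetime import date

# Hypothetical rows: the real dataset's columns and values are not shown here.
TASKS = [
    {"project": "Bridge", "task_group": "Electrical", "status": "Open", "due": date(2023, 9, 1)},
    {"project": "Bridge", "task_group": "Electrical", "status": "Closed", "due": date(2023, 8, 15)},
    {"project": "Bridge", "task_group": "Plumbing", "status": "Open", "due": date(2023, 12, 1)},
    {"project": "Tower", "task_group": "Electrical", "status": "Open", "due": date(2023, 9, 20)},
    {"project": "Tower", "task_group": "Plumbing", "status": "Closed", "due": date(2023, 7, 30)},
    {"project": "Tower", "task_group": "Plumbing", "status": "Open", "due": date(2023, 10, 5)},
]


def status_counts_by_group(tasks):
    """Count tasks for each (task group, status) pair."""
    return Counter((task["task_group"], task["status"]) for task in tasks)


def overdue_by_project(tasks, today):
    """Count overdue tasks per project.

    Assumption: "overdue" means still open with a due date before `today`.
    """
    return Counter(
        task["project"]
        for task in tasks
        if task["status"] == "Open" and task["due"] < today
    )


def text_bar_chart(counts):
    """Render counts as a plain-text bar chart, one line per label."""
    return "\n".join(
        f"{label:<10} {'#' * count} {count}"
        for label, count in sorted(counts.items())
    )


if __name__ == "__main__":
    for (group, status), count in sorted(status_counts_by_group(TASKS).items()):
        print(f"{group:<12} {status:<8} {count}")
    print(text_bar_chart(overdue_by_project(TASKS, today=date(2023, 10, 15))))
```

For someone who codes, this is an afternoon warm-up. For someone who has never opened a terminal, every line of it is new territory.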

This was a task that most of them would have thought was impossible, if they had seen it before coming to our Master’s program. They wouldn’t have even considered trying. So this is totally unlike saving time on a writing task with a first draft, or doing some online research. That’s a task you know you could do, more or less well, and you turn to genAI for a productivity and/or quality boost. For many of my students - and most adults out there in the world - this task was the equivalent of brain surgery.

Here’s how we turned this wall into a hill: the bulk of their grade depended on the “quality” of their interactions with generative AI. I judged this by three criteria: candor (did they honestly and precisely say what they needed), grit (did they hang in there and keep dealing with problems until they were fixed), and creativity (did they find new ways to look at the problem that allowed for better results, did they ask clear, relevant, and useful questions). My working theory was if they were honest and specific about what they wanted, hung in there, and played around with some curiosity, they’d win. Yes, they’d get a correct answer on the assignment (almost everyone did), but more importantly they’d build their own skill and confidence and get a much richer, more “in the bones” sense of what generative AI could do for them.

Despite all this “help” (some felt like it wasn’t enough), students found this work difficult:

Once they got through that solo work, we put them in teams in phase two, and they had to write software that was genuinely usable by real technical project managers to solve a pressing problem in their everyday work. And they had to test it with real users, modify it to incorporate their feedback, then demonstrate their working system to the class.

Click here for the entire assignment - Phases 1 and 2, including the timeline, tasks, grading criteria. Please let me know if you use it, or if you have suggestions - I’d love to hear what you learn!

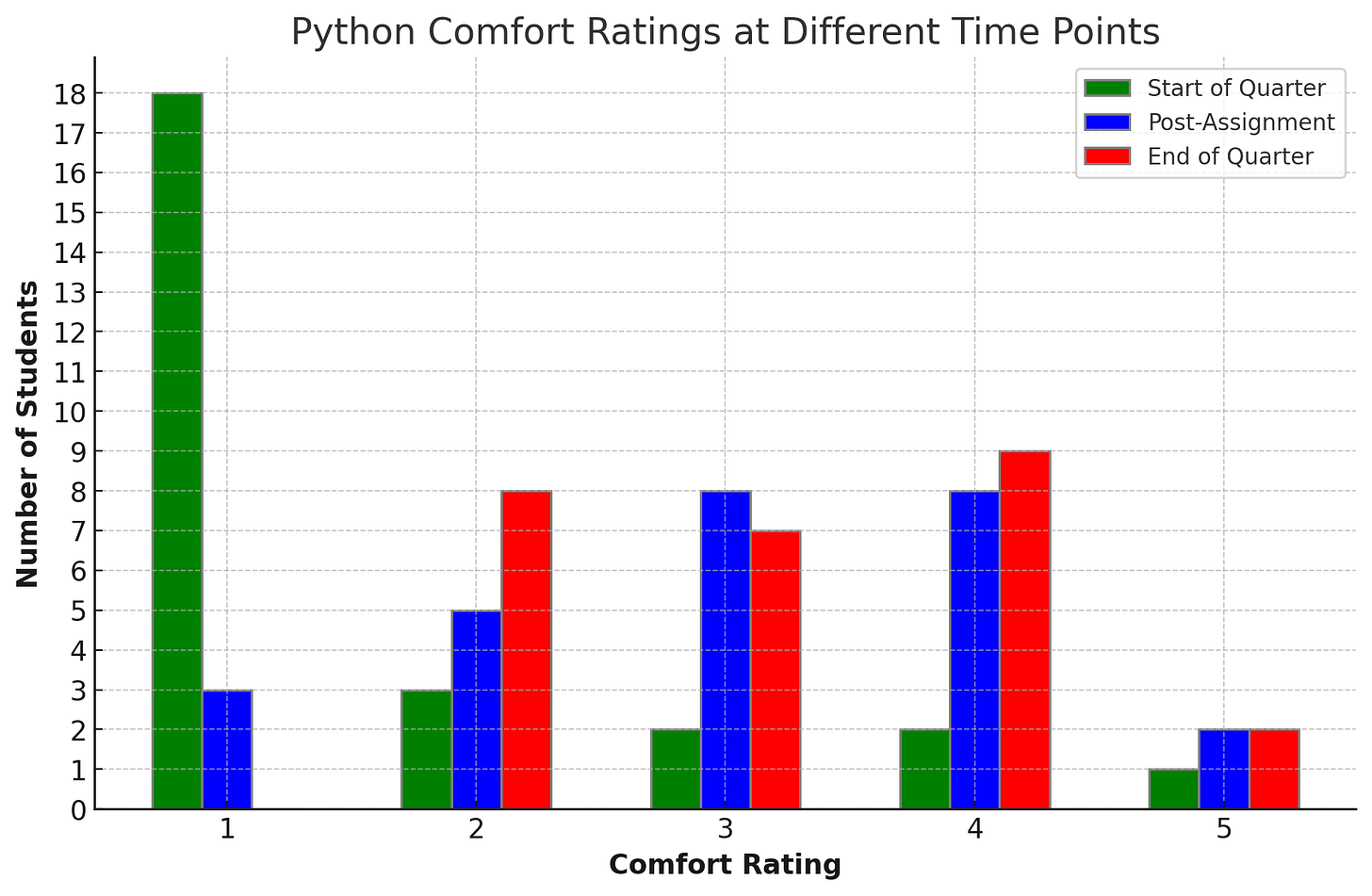

But the main point - and the thing that literally got my jaw to drop - was students’ own assessment of their comfort using Python to solve problems. I won’t spoonfeed this to you - take a look:

In the beginning of the quarter, most (18/26, or 69% - figures in the green bars) were “very uncomfortable” with Python. Again, this was a massive understatement for many. After just three weeks, most (again, 69%, figures in blue bars) were between “neutral” and “very comfortable” with Python. You could say they were lying, but their chat transcripts, work output, and code told the tale: the average grade for Phase one was 95.7%. They’d gotten it. It might not be their go-to recreational activity on a Saturday evening, but they could make a digital machine to do complex work for themselves, where before they wouldn’t have even thought to try. Brandon and I were gobsmacked. We expected some improvement in comfort, but nothing like this.

I would have rated myself a “2” on this scale before the course began, by the way.

I took coding courses at MIT, and these relied on Python, but I hadn’t touched it since. I had worked around plenty of engineers at my startup and as I studied and collaborated with roboticists, so I knew about coding languages, embedded software and sensor fusion, understood basic principles of good software engineering, knew about the broader infrastructures required to create and run code (e.g., GitHub), had learned Mathematica a bit, too, but I couldn’t write you a useful webapp to save my life.

Then I did. In two days. With no help.

Here’s a tweet thread with the whole story, but in early September I realized I needed to overhaul the tool my Project Management students used to run Monte Carlo simulations (basically running a project plan hundreds or thousands of times with some random fluctuations in the length of each task). Instead of buffing it up in Excel again with the help of someone on Upwork - like I did every year - I decided to create a free, functioning, online app that did the same thing.
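If "running a project plan thousands of times" sounds abstract, here is a minimal sketch of the idea in Python. It is not the app I built: the three-task plan, the day estimates, the choice of a triangular distribution, and the assumption that tasks run strictly one after another are all made up for illustration.

```python
import random

# Hypothetical plan: (task, optimistic, most likely, pessimistic) durations in days.
PLAN = [
    ("Design", 3, 5, 10),
    ("Build", 8, 10, 20),
    ("Test", 2, 4, 9),
]


def simulate_once(plan, rng):
    """Draw one random duration per task and add them up.

    Assumption: tasks run back to back, so the project length is the sum.
    """
    return sum(rng.triangular(low, high, mode) for _, low, mode, high in plan)


def simulate(plan, runs=5000, seed=42):
    """Run the plan `runs` times and return the sorted project durations."""
    rng = random.Random(seed)  # fixed seed so repeated calls give the same results
    return sorted(simulate_once(plan, rng) for _ in range(runs))


def percentile(sorted_values, pct):
    """Return the value below which roughly `pct` percent of the runs fall."""
    index = min(len(sorted_values) - 1, int(pct / 100 * len(sorted_values)))
    return sorted_values[index]


if __name__ == "__main__":
    totals = simulate(PLAN)
    print(f"Median finish: {percentile(totals, 50):.1f} days")
    print(f"90% of runs finish within {percentile(totals, 90):.1f} days")
```

The payoff is the spread rather than a single number: instead of one estimate, you can see how long the project takes in a typical run and how long it takes in a bad one.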

I knew ChatGPT was conversational. I knew it could code. I knew it *should* know about the work practices and tools associated with creating and posting a Python-based app online. So I started.

Two ten-hour days later, I was done. The app was running. I was stupefied. Here’s the entire 219-page transcript of my interactions with ChatGPT, from noodling about the idea to deciding to try for it, to debugging (which I had to do offline, since that wasn’t yet built into ChatGPT), to getting it posted on a service called Heroku.

About halfway through day two of this work I realized *this* was the new core of my fall course. Project management was the foil. If I could do this in two days, then students could do it in 10 weeks, especially if they collaborated. And they’d have a huge leg up on MBA-style students without this experience.

Roughly, that very much turned out to be true. But that doesn’t mean it was easy, or fun.

They struggled:

“It was a little difficult for me to understand how to set up Vs code and the GitHub account. so I had to go back and forth with GPT, as it doesn't really give you step-by-step details for a beginner to understand it right away… sometimes it used to be a little frustrating when I used to paste the error that I had in the code, and still again I wasn't able to sort the problem out. I had to really sort a few things yourself.”

Maybe the grading criteria nudged them in this direction, maybe they didn’t. But the students hung in there, asked for what they needed, and ultimately came out on top.

More to the point, they’d learned a *ton* about how to interact with these systems, what they were, when they made things harder, when they made things easier… and they’d been absolutely wowed at what they could do with this thing in their hands. Here are a few comments in that vein after the dust settled:

“Knowing that I can do things I never could before was extremely gratifying.”

“When you see your code, come to life and actually work, it is an amazing feeling. To see something turn in to a working calculator from code almost made me jump out of my chair with joy.”

“I found the use of Chat to build our tool to be a fantastic component of the project. We were able to focus much more on what we wanted to do rather than whether or not we could.”

“When I realise it has become a strength to create something that I envisioned, I just couldn’t stop doing it.”

That’s not to say it was all positive. But that’s the point, too. For some it helped them clarify their concerns about the technology, but these were also much better informed through practice:

“I don’t think this qualifies me to work at a software company, but I guess I could gaslight them into employing me.”

“You must have a baseline knowledge of what you're asking, because it may give you wrong answers without your knowledge. You must always fact-check and proofread the responses.”

It’s one thing to know these things in theory. It’s another to have tried, failed, tried again, failed again, troubleshot, given up in frustration, come back, expressed that frustration to the very system you’re using to do the work, gotten better results, checked them yourself, then… finally, finally… run your code and seen it produce professional-grade results. You know these lessons on a different, much deeper level.

When asked what a potential employer should know about their work in this project:

“I think they should know how fast we all picked up Python after using Chat GPT, and how we were all able to make PM software in that language within 10 weeks, which is crazy!”

“We made a functional and useful product by the end. From past experiences with group projects, the final deliverable is usually underwhelming, but in this instance it was exactly what I hoped.”

“That we did it in the time frame, which initially seemed nearly impossible.”

Not bad. Not bad at all.

We have a raft of improvement ideas for the experience - a true gift from an engaged group of students. I’m confident next year’s students will have a better learning experience as a result, and that they’ll bring even more relevant capability to their new employers or ventures after they leave our program. Perhaps most importantly, they’ll be in a great position to help their new colleagues, leaders, and customers make more informed decisions about what genAI is, what we can do with it, and what to watch out for. That’s probably far more valuable than any actual coding skill they have.

We have too much skill-free critique

It’s of course hard to verify, but my strong intuition is that many of those who issue harsh critiques about generative AI have not gone from 0 to 1. They haven’t put it to work on something they thought was impossible, tailored their genAI interactions to suit their needs, persisted through adversity, taken creative or even playful routes forward, and gotten a result. Nor have they then worked in a group of people to produce a collaborative outcome.

It’s obvious that going from 0 to 1 would not change everyone’s views on everything. That’s not the point. Many of us would clearly retain concerns about ethics, bias, intellectual property, profiteering, control, inequality, and even skill - and we need healthy critiques of generative AI to move forward wisely. But those critiques would be radically more informed about the practical, real possibilities, limitations, and threats associated with deep use of these tools - in a way that was tied to the critiquer’s sense of confidence and skill. Suggestions would be more nuanced. Limitations would be described more precisely, allowing for healthier fixes.

But maybe most importantly, going from 0 to 1 gives each of us a profound sense of what’s possible with this technology, and that we can turn it on a dizzying range of very complicated problems that before we would have treated as impossible.

One of those problems is genAI itself. We’ve essentially acquired the equivalent of undifferentiated electricity and sockets, delivered to billions of households in an instant. Of course that’s going to create massive problems. Just letting those run their course is totally unacceptable. But if each of us takes a little time and invests a bit of sweat equity, we can become conversant in this new mode of getting things done in the world, and we can help guide the global conversation more responsibly.

Want to get in on the action?

If you don’t know how to code, and especially if you’re fearful of it, do phase one yourself.

That’s right. Click on that assignment above. Read through the requirements of phase one. Follow them to the letter. Or if you want a different challenge - let’s say you know Python - just modify the assignment to push you. Don’t know C++? Java? Make yourself do it there. Don’t want to analyze construction data? Find some data that mean something to you. Or create your own data.

The main thing is: make sure the task feels nearly impossible, not just difficult. And don’t let yourself get help, aside from either Bing chat in creative mode or ChatGPT (with GPT-4 enabled). Stay signed in so you can save your transcripts. Send them along, if you’d like - heck, I shared mine!

Be candid. Gritty. Creative. No matter the outcomes, you’ll get results you didn’t think possible, and you’ll become a skill-informed global citizen at a time when we need to be making better decisions than ever.
